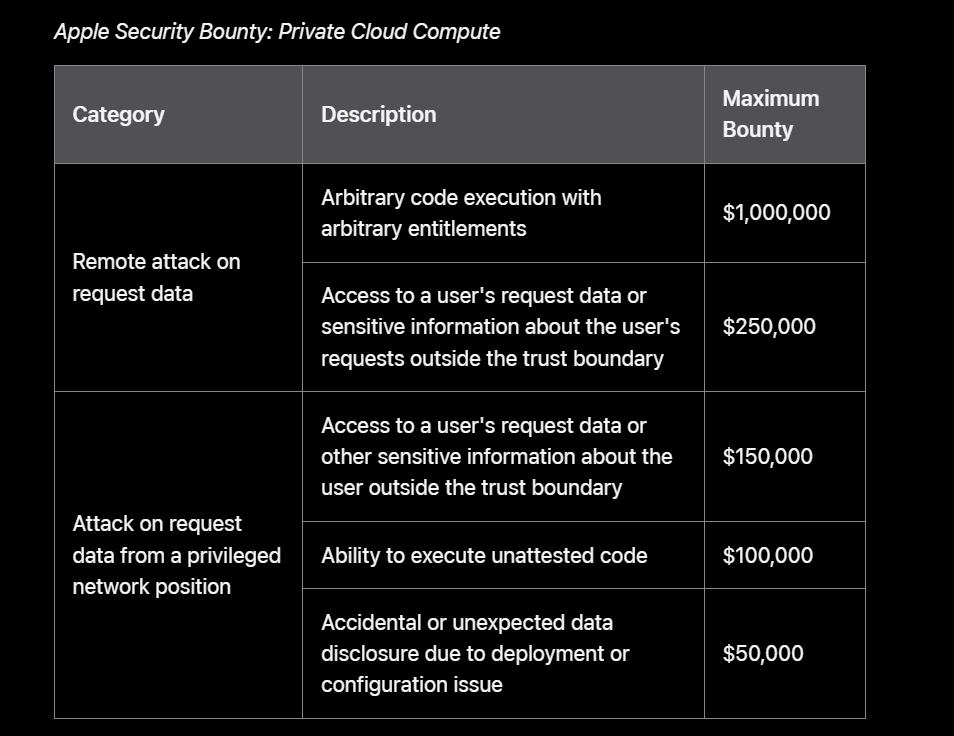

Yes, you heard correctly. Apple has announced that it will pay security researchers up to $1 million for identifying flaws that could compromise the security of its private AI cloud.

Apple also said that researchers could receive up to $250,000 for privately reporting flaws that could be used to extract users’ sensitive data or the requests that users submit to the company’s private cloud.

Apple stated in a post on its security blog that it would pay a maximum reward of $1 million to anyone who discovered vulnerabilities that allow malicious code to be executed remotely on its Private Cloud Compute servers.

“We award maximum amounts for vulnerabilities that compromise user data and inference request data outside the [private cloud compute] trust boundary,” Apple said.

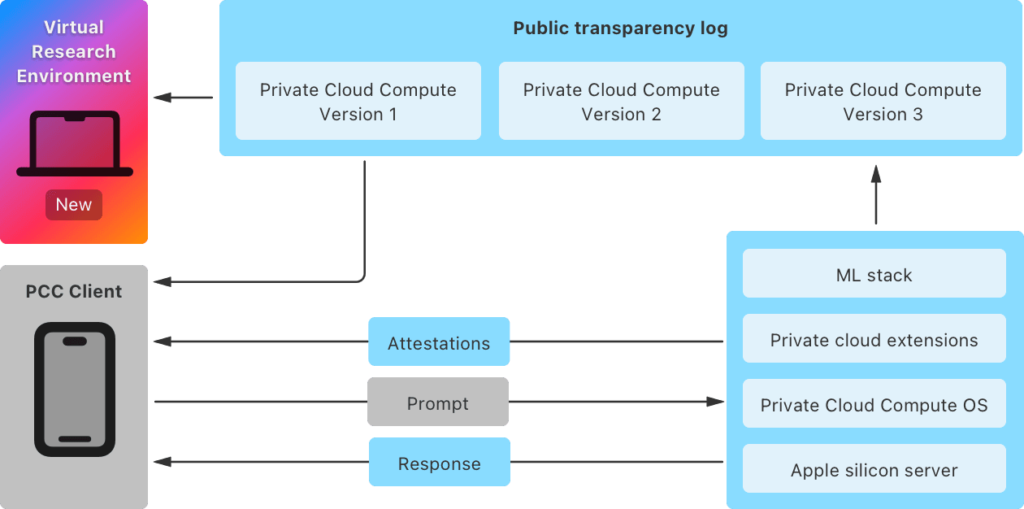

Virtual Research Environment

The Virtual Research Environment (VRE), according to Apple, is a collection of tools that lets anyone run their “own security analysis of Private Cloud Compute” (PCC) directly on their Mac. The tools let you:

- List and inspect PCC software releases.

- Check that the transparency log is consistent.

- Get the binaries that go with each release.

- Launch a release within a virtualised setting.

- Run inference against demonstration models.

- Modify and debug the PCC software to allow for more thorough research.
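To give a feel for what checking a transparency log for consistency means, here is a minimal sketch in Python. It is not Apple's log format or tooling: it assumes a simplified hash chain (real transparency logs typically use Merkle trees), and the function names `chain_head` and `is_consistent` and the release records are hypothetical, made up for illustration. The idea is that a log is consistent if an earlier, saved state can still be reproduced from the newer log, meaning entries were only appended and never rewritten.

```python
import hashlib


def chain_head(entries, start=b"\x00" * 32):
    """Fold entries into a running SHA-256 head: head = H(head || H(entry))."""
    head = start
    for entry in entries:
        head = hashlib.sha256(head + hashlib.sha256(entry).digest()).digest()
    return head


def is_consistent(old_head, old_size, new_entries):
    """Return True if the first old_size entries of the new log reproduce old_head."""
    if old_size > len(new_entries):
        return False  # the log shrank, so history was removed
    return chain_head(new_entries[:old_size]) == old_head


# Hypothetical release records; real PCC log entries look different.
log_v1 = [b"release-A", b"release-B"]
saved_head = chain_head(log_v1)

log_v2 = log_v1 + [b"release-C"]                       # honest append
tampered = [b"release-A", b"release-X", b"release-C"]  # history rewritten

print(is_consistent(saved_head, len(log_v1), log_v2))    # True
print(is_consistent(saved_head, len(log_v1), tampered))  # False
```

A researcher who saved the head of the log at one point in time can later confirm that nothing they previously saw has been altered, which is the property an append-only log is meant to guarantee.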

Private Cloud Compute

Additionally, Apple is making public the source code for certain key components of PCC that help to implement its security and privacy requirements. The company says it is providing this source under a limited-use license agreement so that researchers can perform deeper analysis of PCC.

The projects for which Apple is releasing source code cover a range of PCC areas, including:

- The CloudAttestation project is responsible for constructing and validating the PCC node’s attestations.

- The Thimble project includes the privatecloudcomputed daemon, which runs on a user’s device and uses CloudAttestation to enforce verifiable transparency.

- To protect against accidental data disclosure, the splunkloggingd daemon filters the logs that can be emitted from a PCC node.

- The VRE tooling is contained in the srd_tools project, which you may use to learn how the VRE makes it possible to run PCC code.