Hello, readers of HackingBlogs! Welcome to day six of our free 10-day Bug Bounty training! My name is Dipanshu Kumar. By now you should have a solid understanding of the fundamentals, so today we will go further into key techniques that will help you become a better bug hunter. Whatever your level of experience, today's session offers practical advice and hands-on experience to help you discover more vulnerabilities and succeed in bug bounty programs. Let's get started!

Today we will concentrate on more reconnaissance topics. We will begin with wordlists: what they are, why they matter, and why technology-specific lists and industry-standard collections such as SecLists (including its raft lists) are necessary. We will also see how to mine robots.txt for information and how to use Commonspeak to create our own wordlists. After that, we will perform subdomain enumeration with crt.sh, which relies on parsing certificates, and then brute-force subdomains with tools like Sublist3r and Gobuster. Lastly, to help accelerate our recon work, we will discuss automating the screenshotting process.

What Are Wordlists? Why Do We Need Them in Bug Bounty?

A wordlist is simply a list of words. It is frequently used in security testing, especially for brute-forcing and for enumerating potential vulnerabilities. In bug bounty hunting, wordlists help you identify the likely names or paths of hidden resources such as folders, files, or subdomains, so they typically contain common names of files, directories, or subdomains found on servers and websites.

#Example of a Wordlist

1chocolate

1kauk0wsky1

1q2w3e4r

1q2w-3e

1qazxcvb

1yuni=mori=2

2b636468

2ezwga2t

3bubbles

3idmobarak

3jesus3

04mayravi

4u2cmesk8

05monkey

07caardelean

7dejulio

8password

8reddevil

11jan85

12alex

Why Do We Need Wordlists in Bug Bounty?

Wordlists are crucial to bug bounty hunting because they let you quickly find hidden sections of a website or application that are not linked anywhere or directly accessible. Without wordlists, manually guessing every possible name would be slow and ineffective.
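As a rough illustration of how a brute-forcing tool consumes a wordlist, here is a minimal Python sketch. The function names and the example target are hypothetical; the sketch only cleans the list (dropping blank lines, `#` comments, and duplicates) and turns each entry into a candidate URL. Actually sending the requests is left to tools such as Gobuster, and you should only ever test targets that are in scope.

```python
from pathlib import Path


def parse_wordlist(text):
    """Return wordlist entries in order, skipping blank lines, '#' comments, and duplicates."""
    seen = set()
    words = []
    for line in text.splitlines():
        word = line.strip()
        if not word or word.startswith("#") or word in seen:
            continue
        seen.add(word)
        words.append(word)
    return words


def load_wordlist(path):
    """Read a wordlist file from disk (ignoring undecodable bytes) and parse it."""
    return parse_wordlist(Path(path).read_text(encoding="utf-8", errors="ignore"))


def candidate_urls(base_url, words):
    """Append each word to the base URL: 'admin' -> 'https://example.com/admin'."""
    base = base_url if base_url.endswith("/") else base_url + "/"
    return [base + word.lstrip("/") for word in words]


if __name__ == "__main__":
    # Hypothetical in-memory list; in practice you would call load_wordlist() on a real file.
    words = parse_wordlist("admin\n# comment\nbackup\n\nadmin\nconfig\n")
    for url in candidate_urls("https://example.com", words):
        print(url)
```

Keeping parsing separate from file reading makes it easy to combine several lists before generating guesses.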

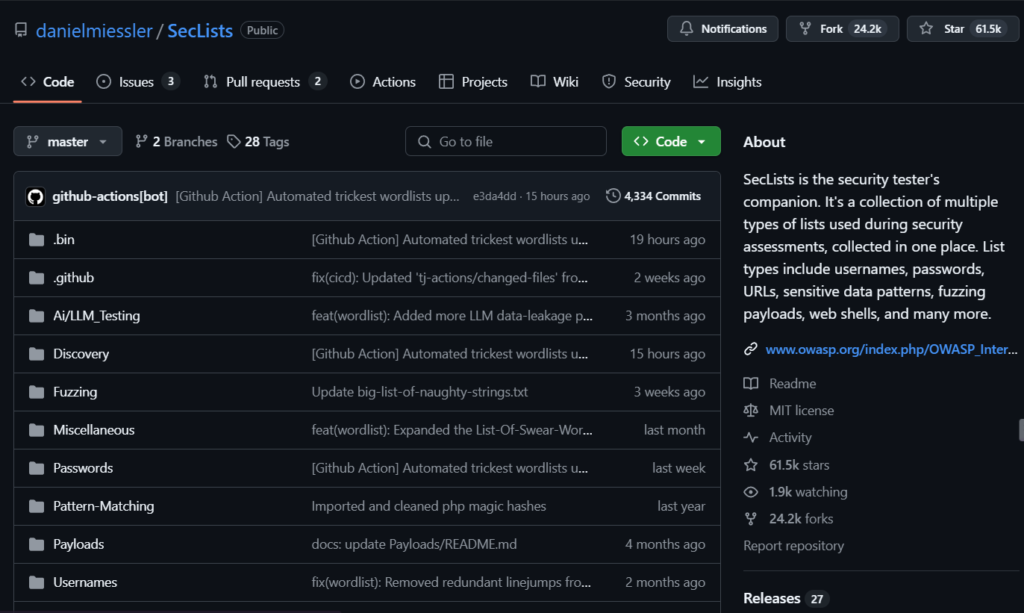

Famous Wordlists

You can use these wordlists when you set yourself up for hunting. (They aren't sponsored or promoted.)

SecLists

Although SecLists usually comes pre-installed on Kali Linux, you can install it with the following command if it isn't:

sudo apt install seclists

RockYou.txt

FuzzDB/wordlists

FuzzDB is a large library of attack patterns, payloads, and wordlists for web application testing. It is frequently used in penetration testing and fuzzing.

How to Squeeze Juice Out of robots.txt

Websites use a plain text file called robots.txt to tell bots and search engine crawlers which parts of the site they should or should not crawl. It lives in the site's root directory (for example, http://example.com/robots.txt). The file is only a request to well-behaved crawlers: it does not stop anyone from visiting the paths it lists.

How Can You Use robots.txt in Bug Bounty?

The robots.txt file is a useful reconnaissance source in bug bounty hunting. It can reveal hidden or sensitive directories and files that the site owner does not want search engines to index, pointing you to possible attack surface that is worth investigating further for weaknesses.

Workflow: robots.txt

Step 1: Accessing the robots.txt file

Simply enter the robots.txt URL in your browser or use cURL in the terminal to obtain the robots.txt file of a website.
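If you prefer scripting over the browser, the following Python sketch does roughly what `curl https://example.com/robots.txt` does, using only the standard library. The helper names and the User-Agent string are made up for illustration. Note that `urlopen` raises an `HTTPError` when the server answers with an error status, for example a 404 when the site has no robots.txt.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import Request, urlopen


def robots_url(site):
    """Build the robots.txt URL at the root of a site, defaulting to https."""
    parts = urlsplit(site if "://" in site else "https://" + site)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(site, timeout=10):
    """Download robots.txt and return it as text (raises HTTPError on 404 and similar)."""
    # The User-Agent value is an arbitrary placeholder.
    req = Request(robots_url(site), headers={"User-Agent": "recon-sketch/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(fetch_robots("example.com"))
```

Because `robots_url` keeps only the scheme and host, you can pass it any page URL from the target and still land on the root robots.txt.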

Step 2: Interpreting the robots.txt for Bug Bounty

- Disallowed Paths:
  - /admin/ (admin panel, often a potential attack surface)
  - /private/ (could contain sensitive user data)
  - /login/ (login page, may have login vulnerabilities)
  - /config/ (configuration files, could contain sensitive information)

- Allowed Paths:
  - /public/ (publicly accessible, usually lower risk)
  - /images/ (images folder, typically public files)
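Picking these paths out by hand works for a short file, but large sites can list hundreds of rules. This minimal Python sketch (the function name is hypothetical) collects the Disallow and Allow paths from a robots.txt body. It deliberately ignores which User-agent group each rule belongs to, on the assumption that for recon every listed path is worth a look.

```python
def parse_robots(text):
    """Collect unique Disallow and Allow paths from a robots.txt body, in file order."""
    rules = {"disallow": [], "allow": []}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop inline comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()  # field names are case-insensitive
        # An empty "Disallow:" means "allow everything", so it adds no path.
        if field in rules and value and value not in rules[field]:
            rules[field].append(value)
    return rules


if __name__ == "__main__":
    sample = """User-agent: *
Disallow: /admin/
Disallow: /private/   # keep crawlers out
Disallow: /login/
Disallow: /config/
Allow: /public/
Allow: /images/
"""
    for path in parse_robots(sample)["disallow"]:
        print("interesting:", path)
```

The Disallow list is usually where to start, since those are the paths the owner explicitly wanted kept out of search results.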

That's it! Dig deeper by visiting these folders yourself. Don't worry, I will teach you the vulnerabilities to look for soon.

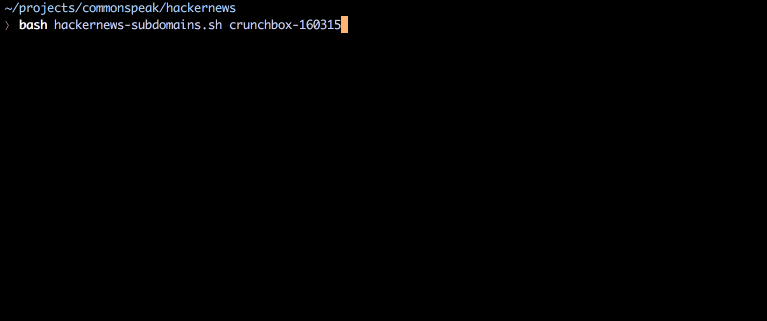

Using Commonspeak to Generate Our Own Wordlists

Commonspeak builds wordlists from data in Common Crawl, a vast open web archive. The repository includes a script for retrieving data from Common Crawl, and once that data has been downloaded and processed, you can generate a wordlist from it by running the repository's generation script.
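Commonspeak's own scripts are not reproduced here. To show the underlying idea, this minimal Python sketch ranks URL path segments by how often they appear, assuming you already have a list of real-world URLs (for example, extracted from Common Crawl). `words_from_urls` and its `min_count` parameter are hypothetical names, not part of Commonspeak.

```python
from collections import Counter
from urllib.parse import urlsplit


def words_from_urls(urls, min_count=1):
    """Split URL paths into segments and return them ranked by frequency."""
    counts = Counter()
    for url in urls:
        # Only the path is used; the scheme, host, and query string are ignored.
        for segment in urlsplit(url).path.split("/"):
            if segment:
                counts[segment.lower()] += 1
    # most_common() sorts by count; ties keep first-seen order.
    return [word for word, n in counts.most_common() if n >= min_count]


if __name__ == "__main__":
    urls = [
        "https://a.example/api/v1/users",
        "https://b.example/api/v2/",
        "https://c.example/admin/login.php?next=/",
    ]
    print("\n".join(words_from_urls(urls)))
```

Raising `min_count` trades coverage for a shorter list of words that real sites actually use.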

Subdomain Enumeration

Subdomain enumeration is the process of finding every subdomain connected to a target domain. For instance, if you are targeting example.com, its subdomains might include www.example.com, blog.example.com, admin.example.com, and so forth.

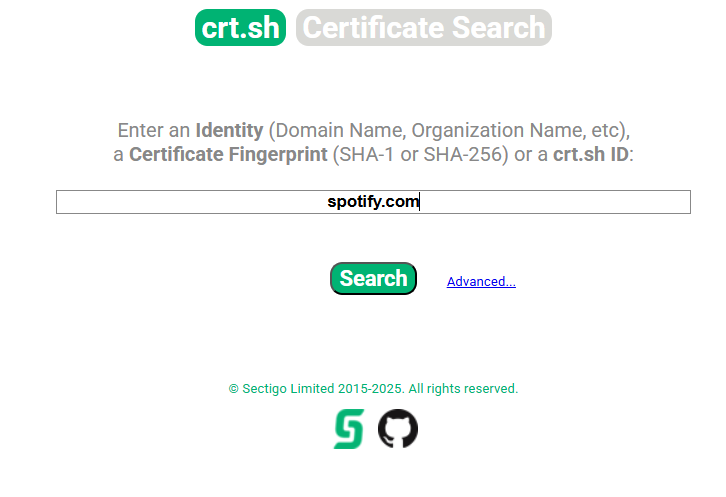

Using crt.sh

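To query crt.sh for spotify.com, type the domain into the search bar on the crt.sh site. crt.sh searches public Certificate Transparency logs, so every certificate issued for a subdomain reveals that subdomain's name, which is why parsing certificates is such a productive way to discover subdomains.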

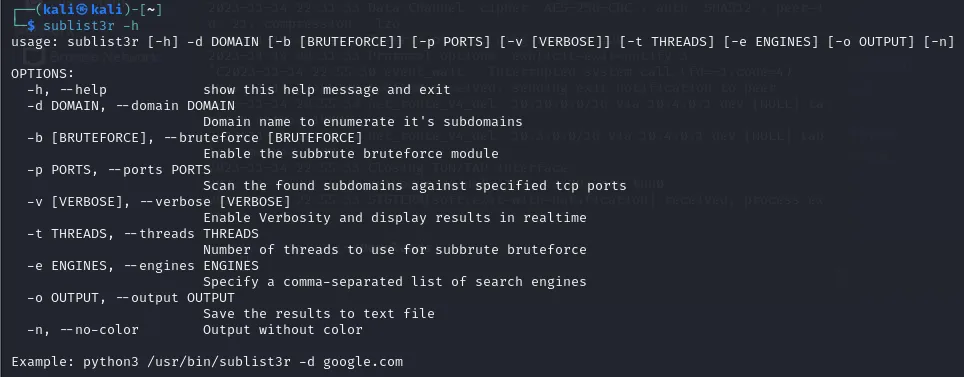
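crt.sh can also return results as JSON, which makes the certificate parsing easy to script. This Python sketch assumes the `output=json` query format and that each returned entry lists its certificate names, one per line, in a `name_value` field; the helper names are hypothetical. Wildcard names such as `*.spotify.com` are reduced to their base name, and anything outside the target domain is dropped.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def crtsh_url(domain):
    """Build a crt.sh JSON query; '%' is crt.sh's wildcard (sent URL-encoded as %25)."""
    return "https://crt.sh/?" + urlencode({"q": f"%.{domain}", "output": "json"})


def subdomains_from_crtsh(json_text, domain):
    """Extract unique in-scope hostnames from crt.sh JSON output."""
    names = set()
    for entry in json.loads(json_text):
        for name in entry.get("name_value", "").splitlines():
            name = name.strip().lower()
            if name.startswith("*."):
                name = name[2:]  # wildcard certificate: keep the base name
            if name == domain or name.endswith("." + domain):
                names.add(name)
    return sorted(names)


def fetch_crtsh(domain, timeout=30):
    """Download the raw JSON (the User-Agent value is an arbitrary placeholder)."""
    req = Request(crtsh_url(domain), headers={"User-Agent": "recon-sketch/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    for host in subdomains_from_crtsh(fetch_crtsh("spotify.com"), "spotify.com"):
        print(host)
```

crt.sh can be slow for large domains, hence the generous timeout.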

Enumerate Subdomains Via Sublist3r

One effective tool for automating subdomain enumeration is Sublist3r, an open-source Python script that gathers subdomains from many sources: search engines such as Google, Bing, Yahoo, Baidu, and Ask, as well as services such as VirusTotal, Netcraft, ThreatCrowd, and DNSdumpster. Install it on Kali Linux with:

sudo apt install sublist3r

To list the subdomains of a target domain such as example.com, run the following command:

sublist3r -d example.com

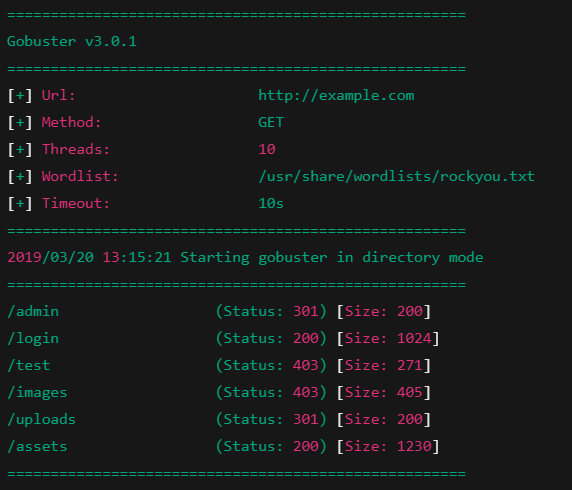
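Sublist3r can save its results to a text file with `-o` (for example, `sublist3r -d example.com -o sublist3r.txt`). Since no single source finds everything, it helps to merge that file with other results, such as the names you collected from crt.sh. This Python sketch does the merge; the file name and the helper names are hypothetical.

```python
from pathlib import Path


def normalize_host(name):
    """Lowercase a hostname and strip whitespace, a trailing dot, and a leading '*.'."""
    host = name.strip().lower().rstrip(".")
    return host[2:] if host.startswith("*.") else host


def merge_subdomains(domain, *sources):
    """Combine several iterables of hostnames into one sorted, de-duplicated, in-scope list."""
    merged = set()
    for source in sources:
        for name in source:
            host = normalize_host(name)
            if host == domain or host.endswith("." + domain):
                merged.add(host)
    return sorted(merged)


if __name__ == "__main__":
    # Hypothetical output file from: sublist3r -d example.com -o sublist3r.txt
    report = Path("sublist3r.txt")
    from_sublist3r = report.read_text().splitlines() if report.exists() else []
    from_crtsh = ["www.example.com", "*.api.example.com"]  # placeholder crt.sh results
    for host in merge_subdomains("example.com", from_sublist3r, from_crtsh):
        print(host)
```

The suffix check requires a leading dot, so look-alike domains such as notexample.com are excluded from scope.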

Enumerate Subdomains Via Gobuster

To install Gobuster on your Linux (Kali) machine, use the following command:

sudo apt install gobuster

Running gobuster -h then shows its available modes and global flags:

$ gobuster -h

Usage:

gobuster [command]

Available commands:

dir Uses directory/file enumeration mode

dns Uses DNS subdomain enumeration mode

fuzz Uses fuzzing mode

help Help about any command

s3 Uses aws bucket enumeration mode

version shows the current version

vhost Uses VHOST enumeration mode

Flags:

--delay duration Time each thread waits between requests (e.g. 1500ms)

-h, --help help for gobuster

--no-error Don't display errors

-z, --no-progress Don't display progress

-o, --output string Output file to write results to (defaults to stdout)

-p, --pattern string File containing replacement patterns

-q, --quiet Don't print the banner and other noise

-t, --threads int Number of concurrent threads (default 10)

-v, --verbose Verbose output (errors)

-w, --wordlist string Path to the wordlist
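Gobuster's `dns` mode brute-forces subdomains by prefixing each wordlist entry to the target domain and checking whether the resulting name resolves. The Python sketch below mimics that idea with the standard library; it is not Gobuster's implementation, the helper names are hypothetical, and unlike real tools it does no wildcard-DNS detection, so a domain that resolves every name will produce false positives. The `threads` default of 10 simply mirrors Gobuster's `-t` default listed in the help output.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def candidate_subdomains(domain, words):
    """Prefix each wordlist entry to the domain: 'dev' -> 'dev.example.com'."""
    return [f"{w.strip().lower()}.{domain}" for w in words if w.strip()]


def resolve(host):
    """Return (host, IPv4 address) if the name resolves, otherwise None."""
    try:
        return host, socket.gethostbyname(host)
    except (OSError, UnicodeError):
        # OSError covers socket.gaierror (name not found); UnicodeError covers invalid labels.
        return None


def dns_bruteforce(domain, words, threads=10):
    """Resolve all candidates concurrently and keep the ones that exist."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        return [hit for hit in pool.map(resolve, candidate_subdomains(domain, words)) if hit]


if __name__ == "__main__":
    # Only run this against domains that are in scope for your program.
    for host, ip in dns_bruteforce("example.com", ["www", "mail", "dev", "staging"]):
        print(host, ip)
```

On Kali, the DNS lists that SecLists typically installs under /usr/share/seclists/Discovery/DNS/ are a natural source for `words`.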

In today's session we covered several important subjects, including wordlist development, robots.txt analysis, and subdomain enumeration. We looked at how resources like crt.sh, Sublist3r, Gobuster, and Commonspeak can help us gather important data during the reconnaissance stage. We also discussed why subdomains matter, how to find them, and why bug bounty hunting depends on them.

Tomorrow we will start the exploitation phase, concentrating on finding and exploiting quick wins such as subdomain takeover, data leaks from GitHub, and misconfigured cloud buckets. After that, we will examine more complex vulnerabilities like SQL Injection, IDOR (Insecure Direct Object References), SSRF (Server-Side Request Forgery), and XSS (Cross-Site Scripting). We will go over how to properly find, exploit, and report these vulnerabilities.