Introduction

In this blog, we will cover Waybackurls for hackers. Web crawling is a crucial component of security testing. The process uses automated scripts or crawling programmes to index data on web pages. These scripts, also referred to as web crawlers, spiders, spider bots, or simply crawlers, are essential for identifying security flaws and evaluating how well a web domain is protected.

Understanding Web Crawling

Web crawling is the methodical scanning of the web to gather data from web pages and index it for different uses. A crawler follows hyperlinks recursively, retrieving each linked page and organising its content for analysis. In security testing, web crawling helps find possible points of entry, weak points, and areas that could be exploited.
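To make the "following hyperlinks" step concrete, here is a minimal bash sketch of the link-extraction part of a crawler: it reads a page's HTML and lists the href targets a crawler would queue next. The function name extract_links is hypothetical, it only handles double-quoted href attributes, and it relies on grep -o, which GNU and BSD grep support. A real crawler would also resolve relative links, remember visited pages, and stay within scope.

```shell
# extract_links (hypothetical helper): read HTML on stdin and print each
# double-quoted href value once. Single-quoted or unquoted hrefs are missed.
extract_links() {
  # Keep only href="..." attributes, strip the attribute name and quotes,
  # then de-duplicate so each link would be queued only once.
  grep -oE 'href="[^"]+"' \
    | sed -E 's/^href="//; s/"$//' \
    | LC_ALL=C sort -u
}

# Usage (needs network access; example.com is a placeholder target):
#   curl -s https://example.com/ | extract_links
```

Running the same extraction again on every newly found page is what makes crawling recursive.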

Importance in Security Testing

Web crawling is a fundamental method for reconnaissance and vulnerability evaluation in security testing. By methodically navigating web domains, it helps locate potential security holes, hidden paths, and exposed sensitive data. Using automated scripts and crawling programmes, security experts can quickly collect information and evaluate the target domain's risk profile.

Installing Waybackurls on a Kali Linux Machine

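We will use the Waybackurls tool on a Kali Linux system to bring web crawling data into vulnerability testing. Rather than crawling the live site itself, Waybackurls fetches the URLs that have already been archived for a domain, such as those stored by the Internet Archive's Wayback Machine, so the scan does not send traffic to the target. Follow these steps to install it: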

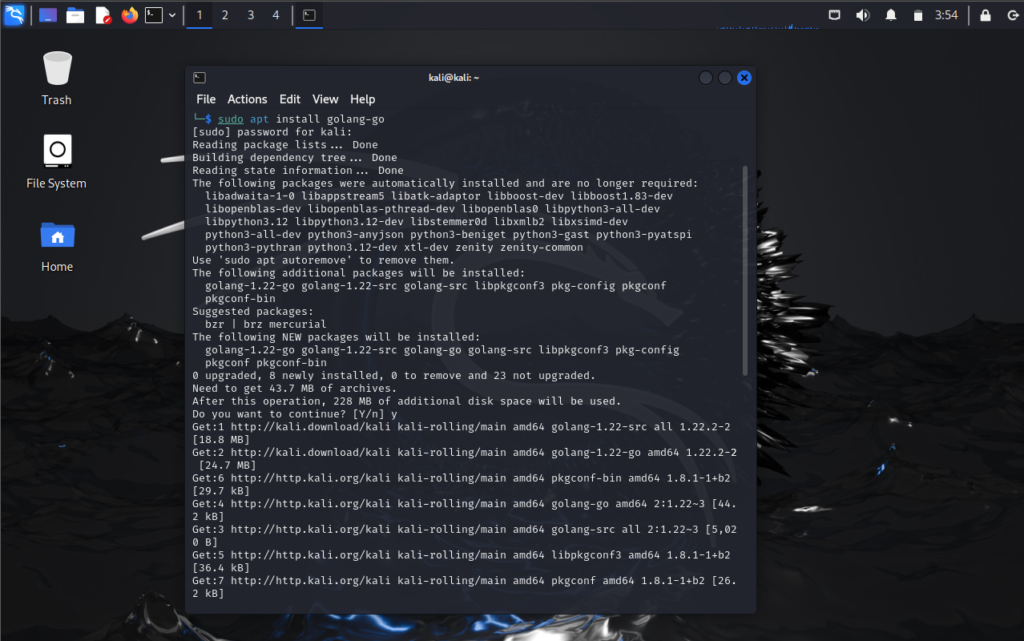

Step 1: Verify Golang Installation

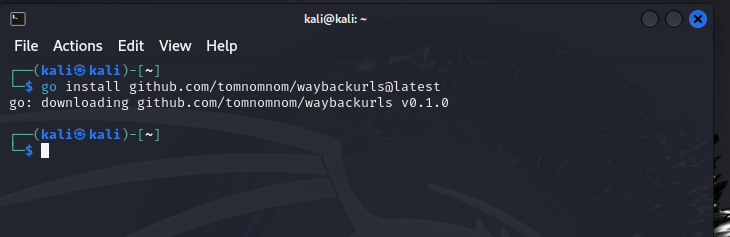

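go version

If this prints a version string, Go is ready. If the command is not found, install Go first, for example with sudo apt install golang-go.

Step 2: Install Waybackurls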

sudo go install github.com/tomnomnom/waybackurls@latest

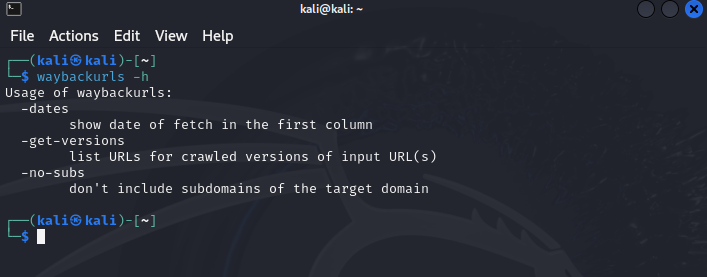

└─$ sudo cp go/bin/waybackurls /usr/local/bin/.Step 3: Explore Tool’s Functionality

waybackurls -h

The -h flag prints the available options.

Working with the Waybackurls Tool

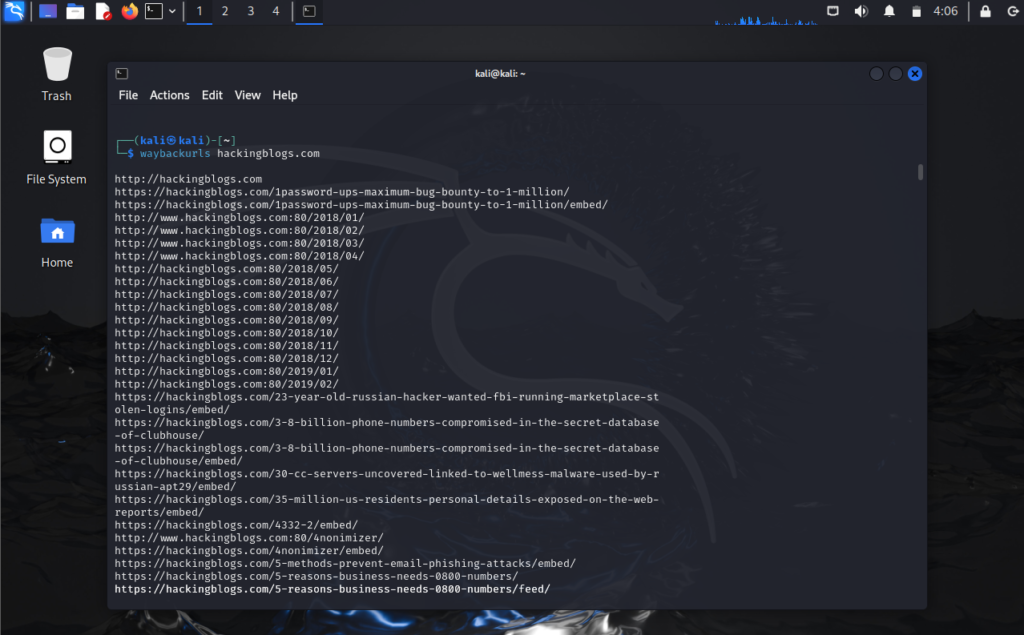
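Before running the examples, you may want to confirm that both tools from the installation steps, go and waybackurls, are on your PATH. This is a small bash sketch; require_cmd is a hypothetical helper, not part of Waybackurls.

```shell
# require_cmd (hypothetical helper): report whether a command is on PATH.
# Prints its location on success; prints to stderr and returns 1 otherwise.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1 -> $(command -v "$1")"
  else
    echo "missing: $1" >&2
    return 1
  fi
}

# Usage:
#   require_cmd go && require_cmd waybackurls && go version
```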

Example 1: Simple Scan

Let’s initiate a basic scan using Waybackurls:

waybackurls hackingblogs.com

This command collects the archived URLs known for the target domain and its subdomains and prints them to the terminal, one per line.

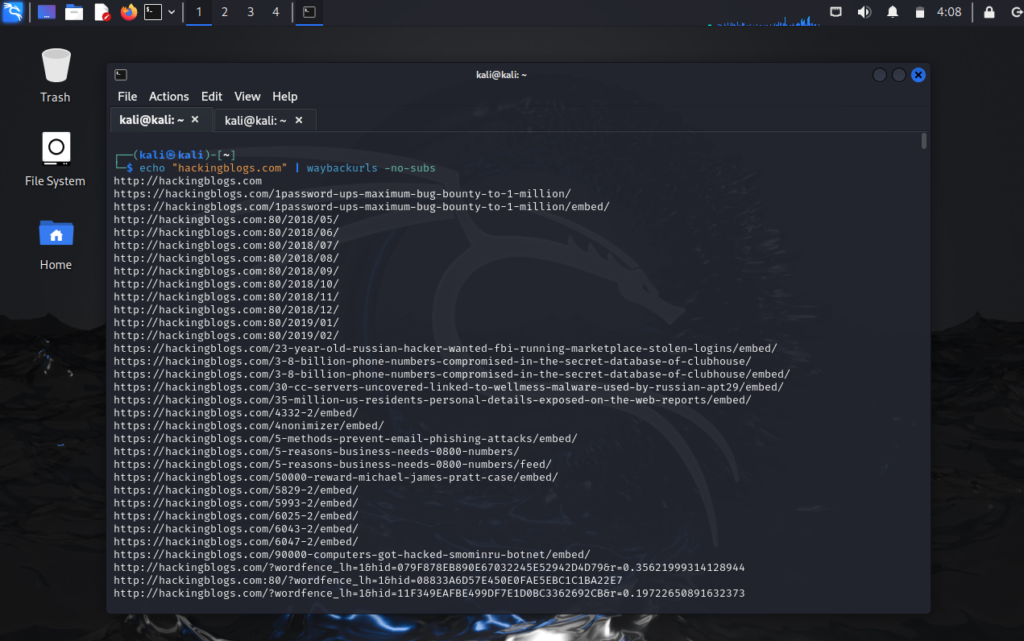
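The output of a simple scan can be long, and many lines differ only in their query strings. Assuming you mainly want the distinct endpoints, a bash sketch like the following strips query strings and fragments before de-duplicating. unique_endpoints and the file names in the usage line are hypothetical.

```shell
# unique_endpoints (hypothetical helper): read URLs on stdin and print each
# distinct URL with its query string and fragment removed.
unique_endpoints() {
  # Remove everything from the first ? or # to the end of the line,
  # then sort and de-duplicate the remaining URLs.
  sed -E 's/[?#].*$//' | LC_ALL=C sort -u
}

# Usage: keep the full list in urls.txt and the endpoints in endpoints.txt
#   waybackurls hackingblogs.com | tee urls.txt | unique_endpoints > endpoints.txt
```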

Example 2: Using the -no-subs Flag

To restrict URLs to the main domain, use the following command:

echo "hackingblogs.com" | waybackurls -no-subs

Here the domain is piped in on standard input instead of being passed as an argument; Waybackurls accepts either. This command fetches only URLs on the main domain itself, excluding its subdomains.

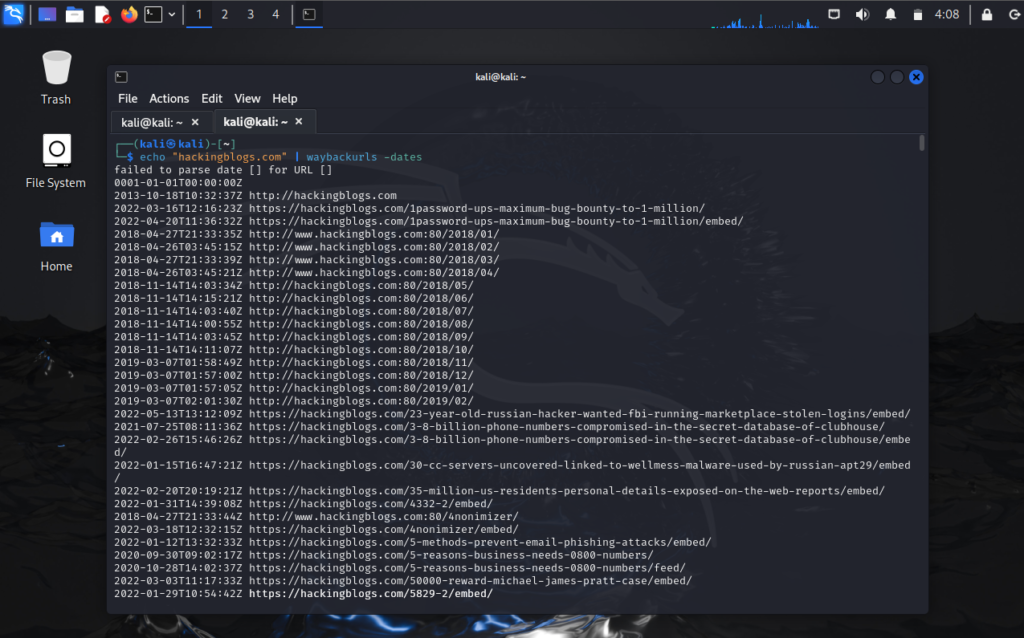
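-no-subs narrows the results to the main domain. The opposite question, which subdomains appear in the default output, can be answered by reducing each URL to its host name. A minimal bash sketch, with extract_hosts as a hypothetical helper name:

```shell
# extract_hosts (hypothetical helper): read URLs on stdin and print each
# distinct host name in lowercase.
extract_hosts() {
  # Drop a leading scheme such as https://, cut everything from the first
  # / : ? or # onward, lowercase the result, and de-duplicate.
  sed -E 's,^[A-Za-z][A-Za-z0-9+.-]*://,,; s,[/:?#].*$,,' \
    | tr '[:upper:]' '[:lower:]' \
    | LC_ALL=C sort -u
}

# Usage:
#   waybackurls hackingblogs.com | extract_hosts
```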

Example 3: Using the -dates Flag

To see when each URL was captured, use the -dates flag:

echo "hackingblogs.com" | waybackurls -dates

This command prints the capture date in the first column next to each URL, which shows how far back the archived data goes.

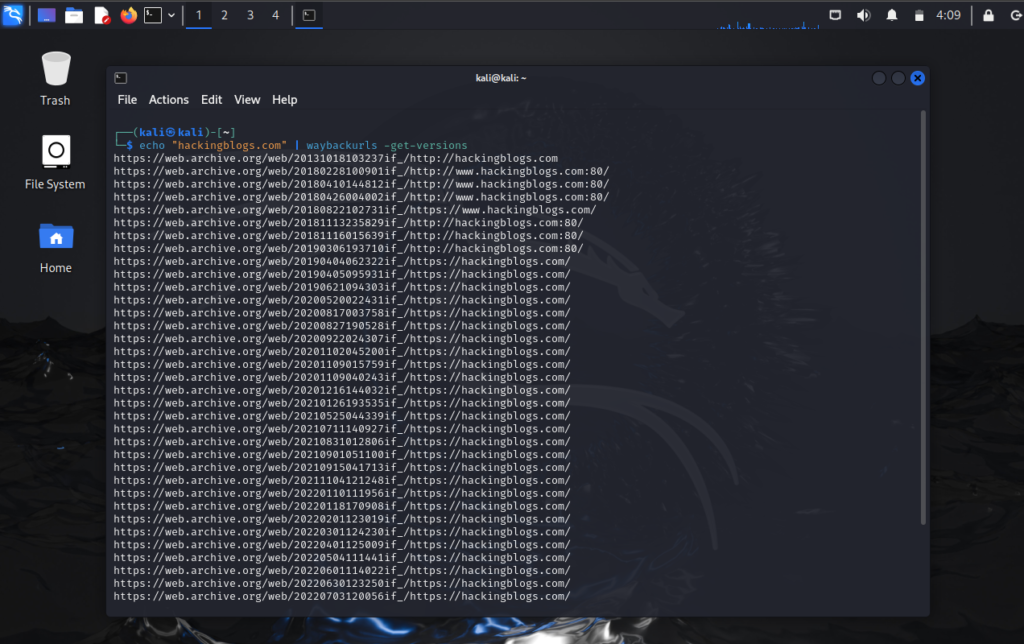
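If you only care about recent captures, you can filter the -dates output by date. This sketch assumes each line is a timestamp followed by the URL, with an ISO-8601-style timestamp such as 2021-05-05T10:00:00Z in the first column; check your own output first, since the help text only says the date appears in the first column. With that format, plain string comparison orders dates correctly. since_year is a hypothetical helper.

```shell
# since_year (hypothetical helper): keep lines whose first column is on or
# after the given cut-off, e.g. 2020 or 2021-06.
since_year() {
  # The shell's $1 is the cut-off passed to the function. Inside the awk
  # program, $1 is the first column of each line (the timestamp). Appending
  # "" forces a string comparison rather than a numeric one.
  awk -v since="$1" '($1 "") >= (since "")'
}

# Usage: print only the URLs captured since 2020
#   echo "hackingblogs.com" | waybackurls -dates | since_year 2020 | awk '{print $2}'
```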

Example 4: Using the -get-versions Flag

To list the archived versions of a URL, use the -get-versions flag:

echo "hackingblogs.com" | waybackurls -get-versions

This command lists URLs for the archived (crawled) versions of each input URL, so you can open earlier copies of a page and see how it changed over time.

FAQs

- What is the significance of web crawling in security testing?

Web crawling facilitates the systematic discovery of vulnerabilities, sensitive information leaks, and potential security gaps in web domains.

- Is Waybackurls suitable for all operating systems?

Waybackurls is written in Go, so it can be built with go install on any platform Go supports, including Linux, macOS, and Windows.

- Can Waybackurls be integrated into automated security testing pipelines?

Yes. Because it reads domains from standard input and prints plain URLs, one per line, Waybackurls fits naturally into shell pipelines and automated reconnaissance and vulnerability assessment workflows.

- Is it legal to use Waybackurls for reconnaissance purposes?

Use Waybackurls responsibly, and make sure you have permission to conduct reconnaissance on the target domain before using the tool.

- Can Waybackurls be used to discover hidden subdomains of a target domain?

Yes, to an extent. By default, its results include subdomains of the target, so the output can reveal subdomains that appear in archived URLs even when the live site no longer links to them.

- Are there any limitations to using Waybackurls?

Yes. It can only return URLs that have been archived, so recently added pages may be missing, and many archived URLs may no longer exist on the live site. Verify the results against the live target before relying on them.
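Because Waybackurls reads domains from standard input and prints one URL per line, it is easy to chain with standard text tools. Here is a minimal bash sketch of one such pipeline step, which keeps only URLs ending in extensions that are often worth a closer look. filter_ext, the extension list, and domains.txt are all hypothetical examples.

```shell
# filter_ext (hypothetical helper): keep URLs whose path ends in one of the
# listed extensions, optionally followed by a query string or fragment.
filter_ext() {
  # -E enables extended regex, -i makes the match case-insensitive.
  # The extension list is only an example; adjust it to your target.
  grep -Ei '\.(js|json|php|bak|sql|zip)([?#]|$)'
}

# Usage: domains.txt holds one domain per line
#   waybackurls < domains.txt | filter_ext > interesting.txt
```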

Conclusion

Web crawling is a key tool for security testing, making comprehensive reconnaissance and vulnerability assessment possible. Using tools like Waybackurls, security experts can systematically examine web domains, uncover historical data, and spot possible security threats. Incorporating web crawling into security testing makes assessments more effective, which in turn strengthens resistance to cyberattacks.

Feel free to check out more interesting blogs on this website, and I will see you in the next one.